When you hear the phrase Artificial Intelligence, do you imagine shiny robots, glowing screens, or maybe a sci-fi movie where machines outsmart humans? That’s fun, but here’s the twist: AI isn’t living far away in the future — it’s sitting quietly in your pocket, helping you every single day.

When Netflix knows what show you’ll binge next… that’s AI.

When your bank stops a fraudster from charging your card… that’s AI.

When your phone unlocks just by looking at your face… yep, AI again.

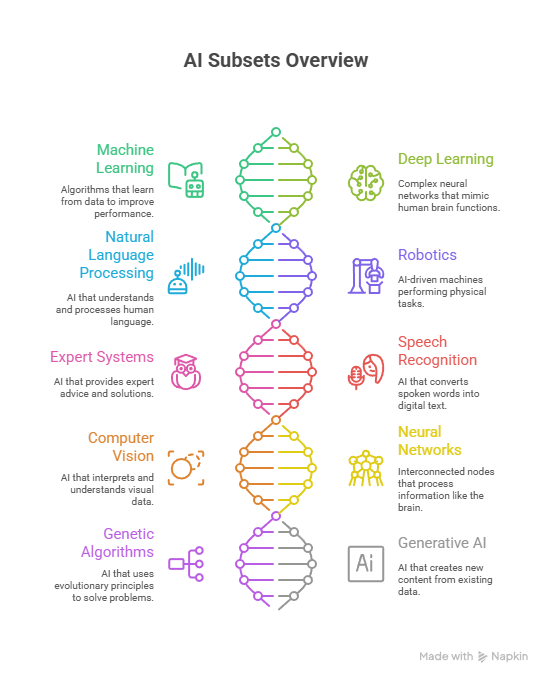

But here’s the part most people don’t realize: AI isn’t one big brain. It’s a family made up of smaller, specialized parts called subsets of artificial intelligence. Each subset has its own personality — the learner, the talker, the creator, the watcher — and together they weave the magic you see in apps, games, hospitals, and even cars.

Curious? Good. Let’s take a walk through this AI family. I’ll show you not only what each subset does but also where it secretly shows up in your daily life. By the end, you’ll probably say: “Wow, I’ve been using AI all along without even knowing it!”

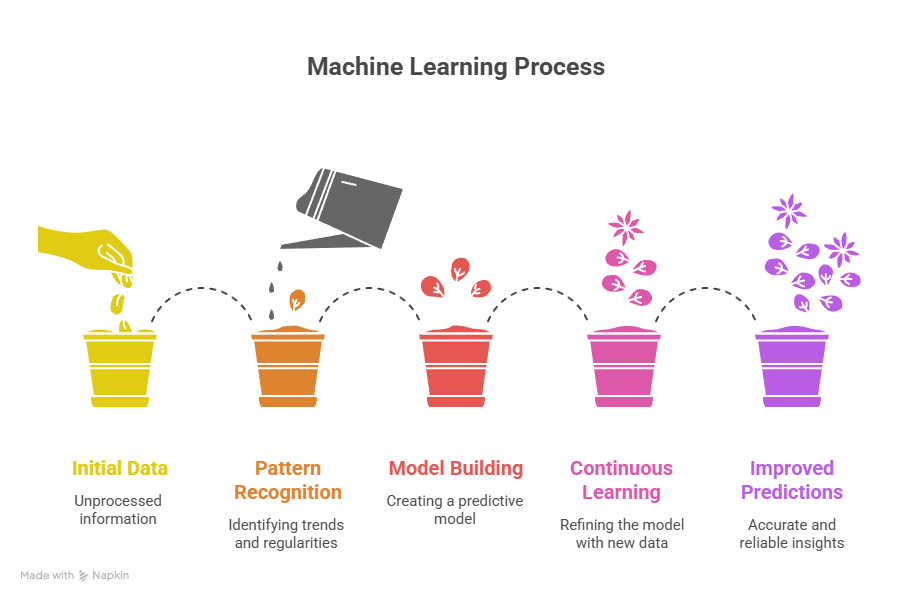

Machine Learning

Machine Learning is like the curious child of the AI family. Imagine teaching a kid math by giving them problem after problem. At first, they make mistakes, but over time, patterns emerge and they get better. That’s what ML does with data. It studies huge amounts of information, notices regularities, and makes predictions — no one has to code every single rule.

Think about Netflix. When it seems to know exactly which series you’ll binge next, Machine Learning is quietly comparing your viewing habits with those of millions of other viewers. Or picture PayPal. It keeps a silent eye on your spending patterns and instantly raises a red flag if something seems odd, like a charge in a country you’ve never visited. Even your Gmail inbox benefits; Google has said that ML helps its spam filter block around one hundred million additional junk emails every day by spotting suspicious clues.

There are flavors of ML too: supervised learning, which is like having a teacher label every example; unsupervised learning, which feels more like exploring a new city without a guide; and reinforcement learning, closer to playing a video game where you learn by trial and error. In every form, ML is the learner that keeps improving every day.
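To see what “a teacher labels every example” might look like in practice, here is a minimal sketch of supervised learning in Python. It is a toy word-counting spam filter, not how Gmail or any real product works; the function names `train` and `predict` and the four example messages are made up for illustration.

```python
from collections import Counter

def train(examples):
    """Count how often each word appears in the messages of each label."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def predict(counts, text):
    """Pick the label whose training messages used these words more often."""
    words = text.lower().split()
    spam_score = sum(counts["spam"][w] for w in words)
    ham_score = sum(counts["ham"][w] for w in words)
    return "spam" if spam_score > ham_score else "ham"

# Hypothetical labeled examples: the "teacher" supplies the right answer for each.
examples = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("lunch at noon tomorrow", "ham"),
    ("see you at the meeting tomorrow", "ham"),
]

model = train(examples)
print(predict(model, "claim your free prize"))
print(predict(model, "meeting at noon"))
```

Nobody wrote a rule saying “free means spam.” The model picked that up from the labeled examples, which is the whole point.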

Deep Learning: The Brainy Genius

If ML is the bright student, Deep Learning is the genius who doesn’t just memorize but truly understands. It relies on neural networks, systems inspired by the human brain. These networks contain layers of artificial “neurons” that pass signals, each layer recognizing more complex features than the last.

Deep Learning powers some of the most jaw-dropping AI feats. Self-driving cars, for instance, don’t just detect that a light is red; they treat it as a signal to stop within the flow of traffic. Doctors use DL tools to scan X-rays and flag tumors that human eyes might miss. Even Siri and Google Assistant use it to understand your voice when you’re speaking over the hum of traffic or the chatter in a café.

Did you know Facebook developed a system called DeepFace that recognizes faces with about 97 percent accuracy on a standard benchmark, nearly matching human performance on the same test? That’s the power of DL: a system digging deeper into data, layer by layer, until it picks out patterns almost as reliably as we do.

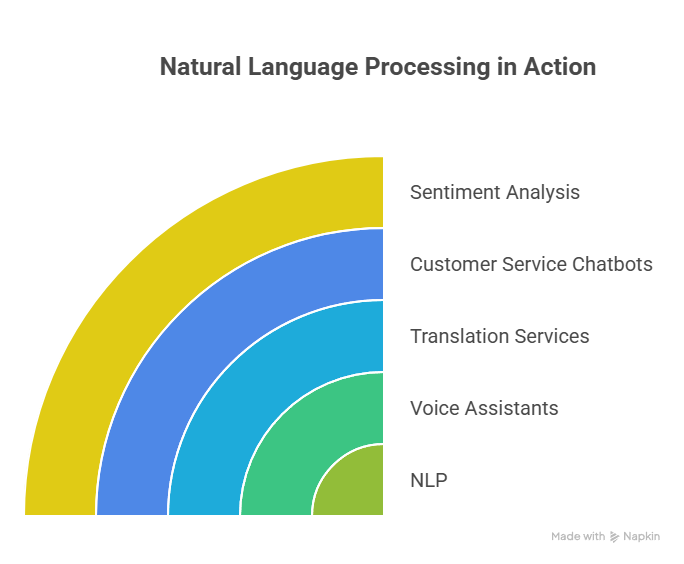

Natural Language Processing (NLP)

We talk in words and emotions, but machines live in numbers. Natural Language Processing, or NLP, is the bridge between us and them. It’s why you can say, “Hey Siri, play my workout playlist,” and your phone understands.

NLP sneaks into your life more often than you think. Google Translate makes multilingual conversations effortless, while customer service chatbots handle your questions long before a human joins the chat. Brands even use NLP to analyze tweets and figure out if customers are thrilled, angry, or just sarcastic.

Here’s a nugget of curiosity: in 2023, OpenAI reported that its GPT-4 language model passed a simulated version of the U.S. bar exam, showing just how far NLP has come. Imagine AI not just chatting, but helping draft legal arguments and contracts. NLP is no longer the stiff translator of the past. It’s the talker who actually gets you.

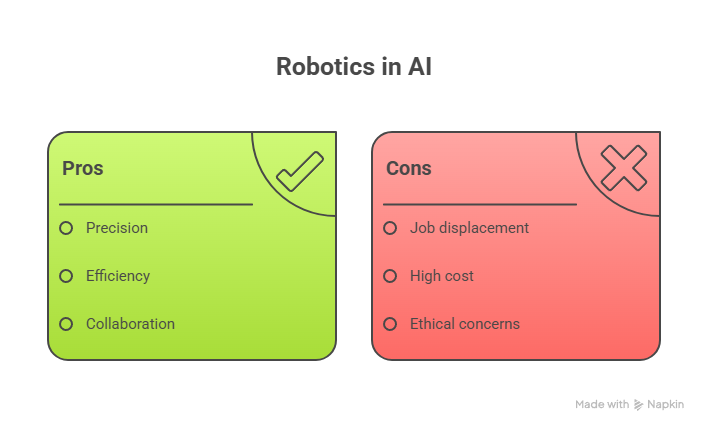

Robotics

Brains are powerful, but sometimes you need brawn. Robotics gives AI arms, legs, and wheels to actually do things. On factory floors, robots assemble cars with a precision no human can sustain for hours. In hospitals, surgical robots assist doctors in delicate operations, holding steady where human hands might shake.

Warehouses are perhaps the best showcase. Amazon’s fleets of robots zoom between shelves, retrieving and moving goods with remarkable speed and consistency. And then there are cobots (collaborative robots) working side by side with humans. Picture yourself lifting heavy boxes with a mechanical partner that never tires.

Here’s a fun fact: Sophia, the humanoid robot, was even granted citizenship in Saudi Arabia. A robot with legal status. If that doesn’t feel like the future arriving early, what does?

Expert Systems: The Wise Advisor

Long before today’s buzz about learning machines, AI relied on something called Expert Systems. Think of them as encyclopedias with logic. They don’t learn; instead, they follow rules crafted by human experts and apply them consistently.
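Here is a minimal sketch of how “rules crafted by human experts” can be applied by a program. The car-trouble rules and fact names below are invented for illustration and are not taken from any real system. The technique shown is forward chaining: keep firing rules until nothing new can be concluded.

```python
# Each rule: if ALL the conditions are known facts, conclude something new.
# These rules are hypothetical examples, not real diagnostic advice.
RULES = [
    ({"engine_silent", "lights_dim"}, "battery_flat"),
    ({"battery_flat"}, "advise_jump_start"),
    ({"engine_cranks", "fuel_gauge_empty"}, "advise_refuel"),
]

def infer(facts):
    """Forward chaining: apply rules repeatedly until no new fact is added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

print(sorted(infer({"engine_silent", "lights_dim"})))
```

Notice that nothing here learns. If the experts’ rules are wrong or incomplete, the system stays wrong until a human edits the list.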

You’ve probably used something in this spirit without knowing it. A simple spell checker that flags “recieve” and suggests “receive” by applying hand-written rules works the way an expert system does, although modern search engines now lean on statistical models for the same job. Back in the 1970s, MYCIN, one of the first expert systems, was built to recommend treatments for bacterial infections.

They may seem old-school, but in certain fields where rules are clear, expert systems are like that wise professor who always has the right answer, even if they aren’t great at improvising.

Speech Recognition

Picture yourself driving, hands busy, and you say, “Call Mom.” Instantly, your phone dials. That’s Speech Recognition, turning sound waves into text and commands.

At first, these systems were clumsy, often mistaking words. Now, they’re incredibly sharp. Siri recognizes different voices in your household and adjusts responses accordingly. Cars let you navigate, send texts, or control music without lifting a finger. Accessibility software gives people with disabilities the freedom to interact with devices purely by voice.

Apple, Google, and Amazon have invested billions in making machines better listeners. And here’s a curiosity: today, some systems are so precise they can even detect who is speaking, based on unique vocal patterns. That’s not just recognition — that’s personalization.

Computer Vision

What if machines could see the way we do? Computer Vision makes that possible. It allows systems to analyze and interpret visual data, whether it’s an image, a video, or even a live feed.

You see it every time your iPhone unlocks with Face ID. Hospitals use it when AI systems scan X-rays and flag signs of disease that doctors might otherwise catch later. Self-driving cars rely on it constantly, interpreting road signs, pedestrians, and traffic lights in real time.

Here’s something you might not have realized: Google Photos can group your pictures not just by person, but even by pets. It can tell the difference between two golden retrievers, something many humans would struggle to do. Machines truly have learned how to watch.

Neural Networks

Behind Deep Learning, and much of modern Machine Learning, lies a structure that makes it all possible: Neural Networks.

They loosely mimic the human brain with nodes (neurons) connected in layers. Each connection carries a weight that is adjusted as the system learns, refining its sense of what’s correct. Over time, neural networks grow astonishingly capable: distinguishing a healthy lung from a diseased one, or recognizing your handwriting when you sign on a tablet.
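To make the idea of connections adjusting as the system learns more concrete, here is a minimal sketch of a single artificial neuron (a perceptron) learning the logical AND of two inputs. Real networks stack thousands or millions of these and use more refined update rules; the function names, learning rate, and toy data here are illustrative assumptions.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is above zero, else stay quiet (0)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(samples, epochs=10, learning_rate=1.0):
    """Perceptron rule: nudge each weight in proportion to the error it contributed to."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Toy training data: output 1 only when both inputs are 1 (logical AND).
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(samples)
print([predict(weights, bias, inputs) for inputs, _ in samples])
```

Every wrong guess shifts the weights a little, and once the neuron gets all four cases right, the updates stop on their own.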

Here’s a perspective check: in the 1960s, early neural networks could barely tell the difference between simple shapes. Today, deep neural networks can write poetry, generate art, and even pilot vehicles. The wiring that started as a spark has become a full-fledged engine of intelligence.

Genetic Algorithms

Sometimes, AI takes lessons from nature. Genetic Algorithms are modeled on evolution itself. They generate many candidate solutions to a problem, score them, select the best, “breed” them together with occasional random mutations, and repeat until the results stop improving or are good enough.
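Here is a minimal sketch of that evolutionary loop in Python. The problem is a deliberately silly toy (evolve a list of 20 bits toward all ones), and the population size, mutation rate, and function names are assumptions chosen for illustration. Real uses such as routing or scheduling swap in a much richer fitness function.

```python
import random

def fitness(bits):
    """Toy score: the more 1s, the fitter the candidate."""
    return sum(bits)

def evolve(length=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]        # selection: keep the fitter half
        children = [parents[0][:]]                   # elitism: carry the best over unchanged
        while len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, length)           # one-point crossover ("breeding")
            child = mom[:cut] + dad[cut:]
            # mutation: occasionally flip a bit to keep exploring
            child = [1 - b if rng.random() < mutation_rate else b for b in child]
            children.append(child)
        population = children
    return max(population, key=fitness)

best = evolve()
print(fitness(best), "out of", len(best))
```

Because the best candidate is always carried forward, the top score never gets worse from one generation to the next, though nothing guarantees it reaches the perfect answer.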

Think about a delivery company with hundreds of trucks. Finding the shortest, most efficient routes would take forever if done manually. Genetic algorithms evolve their way toward a very good solution, saving time and fuel. Airlines use them to manage complex scheduling. Factories rely on them to balance production and reduce waste.

NASA even used genetic algorithms to design spacecraft antennas. The designs looked strange, unlike anything human engineers would draw — yet they outperformed traditional designs. Evolution, it turns out, makes a pretty good engineer.

Generative AI & LLMs

Finally, meet the artist of the AI family: Generative AI. This is the subset that doesn’t just analyze data; it produces new content. For text and code it relies on massive Large Language Models (LLMs), while images and music usually come from related generative models such as diffusion models.

You’ve likely seen it in action. ChatGPT drafts essays and emails in seconds. Midjourney and DALL·E create stunning digital artwork from a single sentence. Some law firms now use AI to produce first drafts of contracts in minutes. Some doctors use it for real-time note-taking during patient visits.

One fascinating moment came in 2023, when an AI-generated song imitating the voices of Drake and The Weeknd went viral. It sounded so authentic that their record label scrambled to have it pulled from streaming services. Machines are no longer just copying; they’re creating.

Quick Recap: The AI Family

Here’s the family of AI subsets at a glance:

Machine Learning – the learner.

Deep Learning – the brainy genius.

Natural Language Processing – the talker.

Robotics – the doer with muscles.

Expert Systems – the wise advisor.

Speech Recognition – the listener.

Computer Vision – the watcher.

Neural Networks – the hidden wiring.

Genetic Algorithms – nature’s problem solver.

Generative AI – the creator.

Why It All Matters

Look back at your day. You wake up and ask, “Hey Google, what’s the weather?” That’s speech recognition and NLP. You unlock your phone with Face ID — computer vision. You scroll Netflix, which knows your taste — machine learning and deep learning. Your bank silently shields you from fraud — ML again. And perhaps later, you read an article that was partly drafted by generative AI.

You’ve already interacted with half the AI family before lunch. These subsets are not far-off science fiction. They’re woven into the fabric of your everyday choices, protecting you, entertaining you, and sometimes even inspiring you.

So what’s next? Expect cobots to leave the factory and enter homes, helping the elderly or assisting with chores. Generative AI could one day create personalized movies tailored entirely to your preferences. Specialized LLMs will likely reshape medicine, law, and education.

At the same time, ethics will play a larger role. Questions of bias, privacy, and fairness will decide how AI evolves. And while Artificial General Intelligence — machines matching humans across every task — is still a dream, the subsets we have today are powerful enough to transform industries again and again.

Conclusion

Artificial Intelligence isn’t one giant mystery. It’s a family of learners, talkers, watchers, and creators. Each subset plays its part, and together they’re changing the way we live, work, and play.

So the next time Netflix tempts you with the perfect show, Siri answers your question, or your car responds to your voice, remember — it’s not magic. It’s one of AI’s many subsets, working just for you. And maybe that’s the most magical part of all.

Why do recommendation systems sometimes keep showing me the same kind of stuff, even when I’m bored of it?

That’s the feedback loop problem in machine learning recommenders. They learn from what you click, then show more of that, which makes you click similar things again. Over time, the model mistakes your past curiosity for a permanent taste. Two fixes help: deliberate “exploration” (the system occasionally shows wild-card items to learn new preferences) and explicit signals (you tell the app “show me less like this”). Think of it as teaching the model that you’re a person, not a single mood.
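Here is a minimal sketch of the “exploration” fix, using a simple strategy often called epsilon-greedy: most of the time show the favorite, but every so often roll the dice. The genre names, click counts, and the `recommend` function are hypothetical, and real recommenders are far more elaborate.

```python
import random

def recommend(click_counts, epsilon=0.1, rng=random):
    """With probability epsilon show a random genre; otherwise the most-clicked one."""
    genres = list(click_counts)
    if rng.random() < epsilon:
        return rng.choice(genres)                 # exploration: a wild-card pick
    return max(genres, key=click_counts.get)      # exploitation: the usual favorite

# Hypothetical viewing history: lots of crime shows, little else.
clicks = {"crime": 42, "comedy": 7, "documentary": 1}

rng = random.Random(1)
shown = [recommend(clicks, epsilon=0.2, rng=rng) for _ in range(1000)]
print({genre: shown.count(genre) for genre in clicks})
```

With `epsilon=0` you get crime shows forever. A small nonzero value gives comedy and documentaries a chance to win you over.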

Can AI design things that look “wrong” to humans but still work better?

Yes—especially with genetic algorithms and neural networks in design optimization. These systems evolve shapes the way nature does: by trying, keeping the winners, and mutating them. The results can look odd—lumpy antennas, twisted brackets, strange bridge trusses—but they can outperform neat, human-made designs. If the goal is “strongest for the least material,” nature-style evolution often beats our sense of symmetry.

Is it true a computer can think a turtle is a rifle? How is that even possible?

With computer vision, tiny pixel tweaks or stickers can fool a model into seeing the wrong thing. These are called adversarial examples. The model’s “eyes” don’t work like ours; it focuses on statistical textures and micro-patterns, not common sense. Add a subtle pattern to a toy turtle, and the model’s internal features light up like it’s a rifle. Defenses include adversarial training, where the model practices on deliberately perturbed images, and mixing in human-checked rules for sanity.

Can a rule-based expert system ever beat modern machine learning?

In narrow, rule-heavy domains, yes. Expert systems shine when the rules are stable and compliance matters more than creativity—tax eligibility checks, safety checklists, certain medical protocols. They don’t learn new tricks by themselves, but they’re transparent and predictable. When you must explain “why,” a clean rule often beats a black-box score.

Why does my voice assistant understand me in a quiet room but stumble in the car?

Speech recognition works hard to separate your voice from noise, accents, and echoes. In a car you get road rumble, fast-changing acoustics, and hands-free mics far from your mouth. On-device noise models and beamforming mics help, but the system still has to guess. Short, command-style phrases (“Play jazz,” “Call Sam”) win because they narrow the search space.

Can language AI really “get” sarcasm?

NLP can catch some sarcasm with context—past messages, emojis, even timing. But sarcasm lives in shared history and tone, things models only approximate. If a friend texts “Great job” after your phone dies mid-call, you hear the eye-roll. A model sees two positive words. Giving it more context (prior chats, the event) helps, but perfect sarcasm detection is still more human than machine.